Next weekend there’s a Code Retreat in Wroclaw: http://coderetreat.wroclaw.pl. I’ll be there, so if you want to cut some code together, retreat with us =)

Developer! Rid your WIP!

October 14, 2010

I’m big on eliminating waste and I’m big on minimizing Work In Progress (WIP).

Here’s my story: a colleague asked me to pair with him because he broke the application and he didn’t know why. So we sat together. My first question was:

– “Did the app work before you made your changes?”

– “Yes”

– “Ok, show me the changes you made”

I saw ~150 unknown files and ~20 modified or added files. I said:

– “That’s a lot of changes. I bet you started making too many changes at once, so you ended up with a lot of WIP. Can we revert all the changes you made?”

– “Errr… I guess so. I know what refactoring I wanted to do. We can do it again”

– “Excellent, let’s clean your workspace now”

We reverted the changes. We also discovered that some core “VCS ignores” were missing (hence so many unknown files in the working copy). We fixed that problem by adding the necessary “VCS ignores”. I continued:

– “Ok, the working copy is clean and the application works OK. Let’s kick off the refactoring you wanted to do. This time, however, we will do the refactoring in small steps. We will keep the WIP small by doing frequent check-ins along the way.”

– “Sounds good”

Guess what. We didn’t encounter the initial problem at all. We completed the refactoring in ~1 hour, doing several check-ins in the process. The previous attempt at the refactoring took much longer because of the few hours spent debugging the issue.

Here’s my advice for a developer who wants to debug less & smile more:

- Understand what your work in progress is; keep WIP under control; keep it small.

- Develop code in small steps.

- Keep your workspace clean; avoid unknown or modified files lingering between check-ins.

subclass-and-override vs partial mocking vs refactoring

January 13, 2009

Attention all noble mockers and evil partial mockers. Actually… both styles are evil :) Spy, don’t mock… or better: do whatever you like, just keep writing beautiful, clean and non-brittle tests.

Let’s get to the point: partial mocking smelled funny to me for a long time. I thought I didn’t need it because, frankly, I hadn’t found a situation where I could use it.

Until a few days ago, when I found a place for it. Did I just come to terms with partial mocks? Maybe. Interestingly, the partial mocking scenario seems to be related to working with code that we don’t have full control of…

I’ve been hacking on a new feature for Mockito, an experiment that is supposed to enhance the feedback from a failing test. Along the way, I encountered a spot where partial mocking appeared handy. I decided to code the problem in a few different ways to convince myself that partial mocking is a true blessing.

Here is an implementation of a JunitRunner. I trimmed it a little so that only the interesting stuff stayed. The runner should print warnings only when the test fails. The super.run(notifier) call is wrapped in a separate method so that I can replace this method from the test.

What would the test look like? I’m going to use subclass-and-override to replace the runTestBody() behavior. This gives me an opportunity to test the run() method.

It’s ugly but shhh… let’s blame the JUnit API.
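The runner and its test did not survive in this copy of the post, so here is a minimal sketch of the subclass-and-override idea in plain Java. `Notifier`, `BaseRunner` and `WarningPrintingRunner` are hypothetical stand-ins, not JUnit’s or Mockito’s real types, and the warning text is made up. To keep the sketch easy to check, the runner collects warnings in a list instead of printing them; `runTestBody()` wraps the superclass call so that a test can override just that one method.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for JUnit's RunNotifier: it only remembers whether a test failed.
class Notifier {
    private boolean failed;

    void fireTestFailure() { failed = true; }

    boolean hasFailures() { return failed; }
}

// Hypothetical stand-in for the JUnit runner being extended.
class BaseRunner {
    void run(Notifier notifier) {
        // A real runner would execute the test class here.
    }
}

// Sketch of the runner: it reports warnings only when the test fails.
class WarningPrintingRunner extends BaseRunner {
    final List<String> warnings = new ArrayList<>();

    @Override
    void run(Notifier notifier) {
        runTestBody(notifier);
        if (notifier.hasFailures()) {
            warnings.add("warning: test failed, check the stubbing"); // hypothetical message
        }
    }

    // The seam: super.run(notifier) wrapped in its own method so a test can replace it.
    void runTestBody(Notifier notifier) {
        super.run(notifier);
    }
}

class SubclassAndOverrideDemo {
    // Subclass-and-override: a test-specific runner whose test body always fails.
    static WarningPrintingRunner runnerWithFailingTest() {
        return new WarningPrintingRunner() {
            @Override
            void runTestBody(Notifier notifier) {
                notifier.fireTestFailure();
            }
        };
    }

    public static void main(String[] args) {
        WarningPrintingRunner failing = runnerWithFailingTest();
        failing.run(new Notifier());
        System.out.println(failing.warnings.size()); // 1

        WarningPrintingRunner passing = new WarningPrintingRunner(); // the base run() does nothing here
        passing.run(new Notifier());
        System.out.println(passing.warnings.size()); // 0
    }
}
```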

The runTestBody() method is quite naughty, but I’ve got a powerful weapon at hand: partial mocking. What’s that? Typically, mocking consists of using a replacement for the entire object. Partial means replacing only part of the real implementation, for example a single method.

Here is the test, using hypothetical Mockito partial mocking syntax. Actually, shouldn’t I call it partial stubbing?

Both tests are quite similar. I test the MockitoJUnitRunner class by replacing the implementation of runTestBody(notifier). The first example uses a test-specific implementation of the class under test. The second test uses a kind of partial mocking. This sort of comparison was done very nicely in this blog post by Phil Haack. I guess I came to a similar conclusion, and I believe that:

- subclass-and-override is not worse than partial mocking

- subclass-and-override might give cleaner code just like in my example. It all depends on the case at hand, though. I’m sure there are scenarios where partial mocking looks & reads neater.

Hold on a sec! What about refactoring? Some say that instead of partial mocking we should design the code better. Let’s see.

The code might look like this. There is a specific interface JunitTestBody that I can inject from the test. And yes, I know I’m quite bad at naming types.

Now I can inject a proper mock or an anonymous implementation of the entire JunitTestBody interface. I’m not concerned about the injection style because I don’t feel it matters much here; I’m passing JunitTestBody as an argument.
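A sketch of the refactored shape, reusing the post’s JunitTestBody name. Its single `run(Notifier)` method, the `Notifier` stand-in and the runner class are assumptions for illustration, not the post’s code. Because the test body arrives as an argument, a test can pass a lambda (a compact anonymous implementation) instead of overriding anything.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for JUnit's RunNotifier.
class Notifier {
    private boolean failed;

    void fireTestFailure() { failed = true; }

    boolean hasFailures() { return failed; }
}

// The interface named in the post; its method signature is an assumption.
interface JunitTestBody {
    void run(Notifier notifier);
}

// Refactored runner: no overridable seam, the test body is passed in as an argument.
class WarningPrintingRunner {
    final List<String> warnings = new ArrayList<>();

    void run(Notifier notifier, JunitTestBody testBody) {
        testBody.run(notifier);
        if (notifier.hasFailures()) {
            warnings.add("warning: test failed, check the stubbing"); // hypothetical message
        }
    }
}

class InjectedTestBodyDemo {
    public static void main(String[] args) {
        WarningPrintingRunner runner = new WarningPrintingRunner();
        // The lambda implements the whole JunitTestBody interface for this test.
        runner.run(new Notifier(), notifier -> notifier.fireTestFailure());
        System.out.println(runner.warnings.size()); // 1
    }
}
```

Note the trade-off: `run` now takes a second parameter, so it can no longer directly override JUnit’s single-argument `Runner.run(RunNotifier)`; some adapter would be needed in real code.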

Let’s draw some conclusions. In this particular scenario, choosing refactoring over partial mocking doesn’t really push me towards a better design. I don’t want to get into details, but the JUnit API constrains me a bit here, so I cannot refactor that freely. Obviously, you may figure out a better refactoring; I’m just yet another Java developer. On the other hand, the partial mocking scenario is a very rare finding for me. I’d suspect something was wrong with my code if I had to use partial mocks too often. After all, look at the tests above: can you say they are beautiful? I can’t.

So,

- I cannot say subclass-and-override < partial mocking

- refactoring is not always > subclass-and-override/partial mocking

- partial mocking might be helpful but I’d rather not overuse it.

- partial mocking scenario seems to lurk in situations where I cannot refactor that freely.

Eventually, I chose subclass-and-override for my implementation. It’s simple, reads nicer and feels less mocky & more natural.

should I worry about the unexpected?

July 12, 2008

The level of validation in typical state testing with assertions is different from that in typical interaction testing with mocks. Allow me to take a closer look at this subtle difference. My conclusion dares to question an important part of the mocking philosophy: worrying about the unexpected.

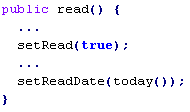

Let me explain by starting from the beginning: a typical interaction based test with mocks (using some pseudo-mocking syntax):

#1

Here is what the test tells me:

– When you read the article

– then the reader.read() should be called

– and NO other method on the reader should be called.
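The pseudo-code for #1 is missing here, so this is a rough sketch of what “no other method should be called” means, using a hand-rolled strict mock built on the JDK’s `java.lang.reflect.Proxy` instead of a mocking framework. `Article`, `Reader` and `StrictMock` are hypothetical; a real framework would throw its own error type, while this sketch throws `AssertionError`.

```java
import java.lang.reflect.Proxy;
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

// Hypothetical collaborator.
interface Reader {
    void read();
    void skip();
}

// Hypothetical class under test: reading an article delegates to the reader.
class Article {
    private final Reader reader;

    Article(Reader reader) { this.reader = reader; }

    void read() { reader.read(); }
}

// A hand-rolled strict mock: allowed calls are recorded, any other call fails immediately.
final class StrictMock {
    static <T> T of(Class<T> type, Set<String> allowed, List<String> calls) {
        Object proxy = Proxy.newProxyInstance(
                type.getClassLoader(),
                new Class<?>[] {type},
                (self, method, args) -> {
                    if (!allowed.contains(method.getName())) {
                        throw new AssertionError("Unexpected interaction: " + method.getName() + "()");
                    }
                    calls.add(method.getName());
                    return null; // only safe for void methods, which is all this sketch needs
                });
        return type.cast(proxy);
    }
}

class InteractionTestDemo {
    // #1: when you read the article, reader.read() is called and nothing else.
    static List<String> readArticleWithStrictReader() {
        List<String> calls = new ArrayList<>();
        Reader reader = StrictMock.of(Reader.class, Set.of("read"), calls);
        new Article(reader).read();
        return calls;
    }

    public static void main(String[] args) {
        System.out.println(readArticleWithStrictReader()); // [read]
    }
}
```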

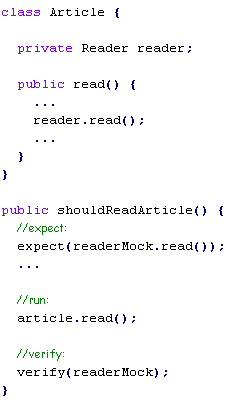

Now, let’s have a look at typical state based test with assertions:

#2

Which means:

– When you read the article

– then the article should be read.

Have you noticed the subtle difference between #1 and #2?

In state testing, an assertion focuses on a single property and doesn’t validate the surrounding state. When the behavior under test changes some additional, unexpected property, the test will not detect it.

In interaction testing, a mock object validates all interactions. When the behavior under test calls some additional, unexpected method on a mock, it is detected and an UnexpectedInteractionError is thrown.

I wonder: should interaction testing be extra defensive and worry about the unexpected?

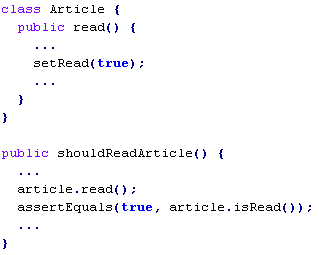

Many of you say ‘yes’, but then how come you don’t do state-based testing along the same pattern? I’ll show you how:

#3

Which means:

– When you read the article

– then the article should be read

– and no other state on the article should change.

Note that the assertion is made on the entire Article object. It effectively detects any unexpected state change (e.g. if the read() method changes some extra property on the article, the test fails). This way, a typical state-based test becomes extra defensive, just like a typical test with mocks.
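The code for #2 and #3 is missing in this copy, so here is a sketch contrasting them, assuming a hypothetical `Article` with two flags and a value-based `equals()`. The focused check (#2) looks only at `isRead()`; the whole-object check (#3) compares against an expected `Article` and therefore also catches an extra `star()` change.

```java
import java.util.Objects;

// Hypothetical Article with value-based equality, as example #3 needs.
class Article {
    private boolean read;
    private boolean starred;

    void read() { read = true; }

    void star() { starred = true; }

    boolean isRead() { return read; }

    // Builds an article in a known state, to serve as the expected value in #3.
    static Article withState(boolean read, boolean starred) {
        Article article = new Article();
        article.read = read;
        article.starred = starred;
        return article;
    }

    @Override
    public boolean equals(Object o) {
        if (!(o instanceof Article)) return false;
        Article other = (Article) o;
        return read == other.read && starred == other.starred;
    }

    @Override
    public int hashCode() { return Objects.hash(read, starred); }
}

class StateTestDemo {
    // #2: a focused assertion on one property; other changes go unnoticed.
    static boolean focusedCheckPasses(Article article) {
        return article.isRead();
    }

    // #3: assert on the whole object; any unexpected state change fails the check.
    static boolean wholeObjectCheckPasses(Article article) {
        return Article.withState(true, false).equals(article);
    }

    public static void main(String[] args) {
        Article plain = new Article();
        plain.read();
        Article withSurprise = new Article();
        withSurprise.read();
        withSurprise.star(); // pretend read() had this unexpected side effect

        System.out.println(focusedCheckPasses(plain) + " " + wholeObjectCheckPasses(plain));               // true true
        System.out.println(focusedCheckPasses(withSurprise) + " " + wholeObjectCheckPasses(withSurprise)); // true false
    }
}
```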

The thing is, state-based tests are rarely written like that. So the obvious question is: how come finding the unexpected is more important in interaction testing than in state testing?

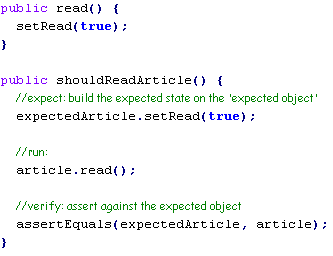

Let’s consider the pros & cons. Surely, detecting the unexpected seems to add credibility and quality to the test. Sometimes, however, it just gets in the way, especially when doing TDD. To explain it more clearly, let’s get back to example #3: the state-based test with detection of the unexpected enabled. Say I’d like to test-drive a new feature:

#4

I run the tests and find out that the newly added test method fails. It’s time to implement the feature.

I run the tests again and the new test passes now, but hold on… the previous test method fails! Note that the existing functionality is clearly not broken.

What happened? The previous test detected the unexpected change on the article – setting the date. How can I fix it?

1. I can merge both test methods into one, which is probably a good idea in this silly example. However, many times I really want small, separate test methods that are focused on behavior. One-assert-per-test people do it all the time.

2. Finally, I can stop worrying about the unexpected and focus on testing what is really important:

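```java
// Fragment of a JUnit 4 test class: assumes org.junit.Test, static imports from
// org.junit.Assert, an `article` field and a `today()` helper defined elsewhere.
@Test
public void shouldSetReadDateWhenReading() {
    article.read();

    assertEquals(today(), article.getReadDate());
}

@Test
public void shouldReadArticle() {
    article.read();

    assertTrue(article.isRead());
}
```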

Ok, I know the example is silly. But it only serves to explain why worrying about the unexpected may NOT be such a good friend of TDD or of small & focused test methods.
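To make the #4 story concrete, here is a sketch with a hypothetical `Article` that records a read date. It injects a `java.time.Clock` (fixed in the demo) so that `today()` is deterministic; that choice, the class names, and returning booleans instead of using a test framework are assumptions, not the post’s code. After the feature is added, the two focused tests still pass, while a #3-style expectation written before read dates existed no longer matches.

```java
import java.time.Clock;
import java.time.Instant;
import java.time.LocalDate;
import java.time.ZoneOffset;
import java.util.Objects;

// Hypothetical Article after the #4 feature: reading also records the read date.
class Article {
    private final Clock clock;
    boolean read;       // package-private so the sketch can build expected states
    LocalDate readDate;

    Article(Clock clock) { this.clock = clock; }

    void read() {
        read = true;
        readDate = LocalDate.now(clock); // the newly test-driven feature
    }

    boolean isRead() { return read; }

    LocalDate getReadDate() { return readDate; }

    @Override
    public boolean equals(Object o) {
        if (!(o instanceof Article)) return false;
        Article other = (Article) o;
        return read == other.read && Objects.equals(readDate, other.readDate);
    }

    @Override
    public int hashCode() { return Objects.hash(read, readDate); }
}

class FocusedTestsDemo {
    static final Clock CLOCK = Clock.fixed(Instant.parse("2008-07-12T10:00:00Z"), ZoneOffset.UTC);

    static LocalDate today() { return LocalDate.now(CLOCK); }

    // Focused test: only the read date matters here.
    static boolean shouldSetReadDateWhenReading() {
        Article article = new Article(CLOCK);
        article.read();
        return today().equals(article.getReadDate());
    }

    // Focused test: only the read flag matters here.
    static boolean shouldReadArticle() {
        Article article = new Article(CLOCK);
        article.read();
        return article.isRead();
    }

    // The old #3-style expectation: "read, and nothing else changed" (no read date).
    static boolean oldWholeObjectTest() {
        Article article = new Article(CLOCK);
        article.read();
        Article expected = new Article(CLOCK);
        expected.read = true;
        return expected.equals(article);
    }

    public static void main(String[] args) {
        System.out.println(shouldSetReadDateWhenReading()); // true
        System.out.println(shouldReadArticle());            // true
        System.out.println(oldWholeObjectTest());           // false: the read date is "unexpected"
    }
}
```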

Let’s get back to mocking.

Most mocking frameworks detect the unexpected by default. When new features are test-driven as new test methods, sometimes existing tests start failing due to unexpected interaction errors. What happens next?

1. Junior developers copy-paste expectations from one test to another making the test methods overspecified and less maintainable.

2. Veteran developers modify existing tests and change the expectations to ignore some/all interactions. Most mocking frameworks enable developers to ignore expectations selectively or object-wise. Nevertheless, it is still a bit of a hassle: why should I change existing tests when the existing functionality is clearly not broken? (Like in example #4: the functionality is not broken but the test fails.) The other thing is that explicitly ignoring interactions is also a bit like overspecification. After all, to ignore something I prefer not to write ANYTHING. In state-based tests, if I don’t care about something, I don’t write an assertion for it. It’s simple and so natural.

To recap: worrying about the unexpected sometimes leaves me with overspecified tests or less comfortable TDD. Now, do I want to trade that for quality, the quality introduced by extra-defensive tests?

The thing is, I haven’t found proof that quality improves when every test worries about the unexpected. That’s why I prefer to write extra-defensive tests only when it’s relevant. That’s why I really like state-based testing. That’s why I prefer spying to mocking. Finally, that’s why I don’t write verifyNoMoreInteractions() in every Mockito test.
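A minimal sketch of the spying style in plain Java, with a hypothetical hand-rolled spy rather than Mockito’s API: the spy never fails on its own, the test verifies only the interaction it cares about, and the stricter check (loosely in the spirit of verifyNoMoreInteractions()) is opt-in. `Article`, `Reader` and `ReaderSpy` are illustrative names.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical collaborator.
interface Reader {
    void read();
    void log(String message);
}

// Hypothetical class under test; log() is an incidental interaction.
class Article {
    private final Reader reader;

    Article(Reader reader) { this.reader = reader; }

    void read() {
        reader.log("reading article");
        reader.read();
    }
}

// Hand-rolled spy: it records every call and never fails by itself.
class ReaderSpy implements Reader {
    final List<String> calls = new ArrayList<>();

    @Override
    public void read() { calls.add("read"); }

    @Override
    public void log(String message) { calls.add("log"); }

    // Verify only what this test cares about; other calls are ignored.
    void verifyCalled(String method) {
        if (!calls.contains(method)) {
            throw new AssertionError(method + "() was not called; calls: " + calls);
        }
    }

    // Opt-in strictness: fail unless exactly these calls happened, in this order.
    void verifyOnly(String... expected) {
        if (!calls.equals(List.of(expected))) {
            throw new AssertionError("Unexpected interactions: " + calls);
        }
    }
}

class SpyDemo {
    public static void main(String[] args) {
        ReaderSpy spy = new ReaderSpy();
        new Article(spy).read();
        spy.verifyCalled("read");      // passes even though log() was also called
        spy.verifyOnly("log", "read"); // the strict check, used only where it is relevant
        System.out.println(spy.calls); // [log, read]
    }
}
```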

What do you think? Have you ever encountered related problems when test-driving with mocks? Do you find the quality improved when interaction testing worries about the unexpected? Or perhaps should state testing start worrying about the unexpected?

10 rules of (unit) testing

January 31, 2008

There you go, those are my 10 rules of (unit) testing. It’s nothing exciting, really. Everyone is so agile these days. Are you agile? I bet you’d say: I was born agile.

Here I come with my own 10 commandments. I came up with them while doing research on mock frameworks (haven’t you heard about Mockito yet? It’s the ultimate TDD climax with Java mocks). I browsed so much of our project’s test code that I feel violated now (the codebase is nice, though).

- If a class is difficult to test, refactor the class. Precisely: split the class if it’s too big or if it has too many dependencies.

- It’s not only about test code but I need to say that anyway: Best improvements are those that remove code. Be budget-driven. Improve by removing. The only thing better than simple code is no code.

- Having 100% line/branch/statement/whatever coverage is not an excuse to write dodgy, duplicated application code.

- Again, not only about test code: Enjoy, praise and present decent test code to others. Discuss, refactor and show dodgy test code to others.

- Never forget that test code is production code. Test code also loves refactoring.

- Hierarchies are difficult to test. Avoid hierarchies to keep things simple and testable. Reuse-by-composition over reuse-by-inheritance.

- One assert per test method is a bit fanatical. However, be reasonable and keep test methods small and focused around behavior.

- Regardless of what mock framework you use, don’t be afraid of creating hand crafted stubs/mocks. Sometimes it’s just simpler and the output test code is clearer.

- If you have to test a private method, you’ve just promoted it to a public method, potentially on a different object.

- Budget your tests not only in terms of lines of code but also in terms of execution time. Know how long your tests run. Keep them fast.
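As an illustration of the rule about hand-crafted stubs, here is a sketch with a hypothetical `ExchangeRates` dependency: the stub is a one-line lambda, with no mock framework and no expectations to set up. The names and the rate are made up.

```java
// Hypothetical dependency that would normally call a remote service.
interface ExchangeRates {
    double rate(String from, String to);
}

// Hypothetical class under test.
class PriceConverter {
    private final ExchangeRates rates;

    PriceConverter(ExchangeRates rates) { this.rates = rates; }

    long convertCents(long cents, String from, String to) {
        return Math.round(cents * rates.rate(from, to));
    }
}

class HandCraftedStubDemo {
    public static void main(String[] args) {
        // Hand-crafted stub: always answers 4.0, whatever the currencies.
        ExchangeRates fixedRate = (from, to) -> 4.0;
        PriceConverter converter = new PriceConverter(fixedRate);
        System.out.println(converter.convertCents(250, "EUR", "PLN")); // 1000
    }
}
```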

Rule #1 is my personal pet. Everybody is so test-driven, but so few really care about #1 in practice. The best classes, frameworks or systems offer painless testing because they’re designed & refactored to be testable. Let the code serve the test and you will produce a delightful system.

Rule #7 is a funny one (’cos we need a little controversy). The whole world rolls in the opposite direction. Maybe I just don’t get it… yet. Or maybe it’s because I once paired with a young one-assert-per-test guy. He cunningly kept writing test methods to check whether the constructor returns a non-null value. If I had to play this game and choose my one-something-per-test philosophy, I’d steal MarkB’s idea: one-behaviour-per-test. After all, it’s all about behavior…

is private method an antipattern

January 5, 2008

After a conversation with my friend and a cursory Google search, here are my thoughts:

The ‘private method is a smell’ idea seems to have been ignited by the birth of TDD. Test-infected people wanted to know how to test their private methods. Gee… it’s not easy, so the question evolved from ‘how’ into ‘why’: why test a private method at all? Most TDDers would answer instantly: don’t do it. Yet again, TDD changed the way we craft software and re-evaluated private methods :)

Test-driven software tends to have fewer private methods. Shouldn’t we have a metric like a private-to-public methods ratio? I wonder what the result would be for some open source projects (like Spring, famous for its clean code).
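A rough sketch of such a metric for a single class, using plain Java reflection. Which methods to count is an assumption: this version ignores package-private, protected and compiler-generated methods, and `ReportGenerator` is a made-up class to measure. Running it over a whole project would additionally need a way to enumerate classes, which is left out.

```java
import java.lang.reflect.Method;
import java.lang.reflect.Modifier;

// Rough sketch of the metric: private methods per public method, for one class.
final class PrivacyRatio {
    static double of(Class<?> type) {
        int privateMethods = 0;
        int publicMethods = 0;
        for (Method method : type.getDeclaredMethods()) {
            if (method.isSynthetic()) {
                continue; // skip compiler-generated methods such as lambda bodies
            }
            int modifiers = method.getModifiers();
            if (Modifier.isPrivate(modifiers)) {
                privateMethods++;
            } else if (Modifier.isPublic(modifiers)) {
                publicMethods++;
            }
        }
        // A class with no public methods is treated as "infinitely private".
        return publicMethods == 0 ? Double.POSITIVE_INFINITY : (double) privateMethods / publicMethods;
    }
}

// Hypothetical class to measure: two public and two private methods.
class ReportGenerator {
    public String generate() { return header() + body(); }
    public String title() { return "Report"; }
    private String header() { return "# "; }
    private String body() { return title(); }
}

class PrivacyRatioDemo {
    public static void main(String[] args) {
        System.out.println(PrivacyRatio.of(ReportGenerator.class)); // 1.0
    }
}
```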


So, is private method an antipattern or not!?

If the class is so complex that it needs private methods, shouldn’t we aim to pull some of that complexity into its own class?

The code never lies: we looked at several notably big classes and put their private methods under a magnifying glass. Guess what… Some of the private methods were clear candidates to be refactored out into a separate class. In one case we even moved a whole private method, without a single change, into a separate class ;)

Privacy may be an antipattern. Look at your private methods – I bet some of them should be public methods of different objects.
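A small sketch of that kind of move, with hypothetical classes: a private formatting method is moved, unchanged, into its own class, where it becomes a public method that can be tested directly and injected where it is needed.

```java
import java.util.Locale;

// Before: the formatting logic hides in a private method, testable only through print().
class InvoicePrinterBefore {
    String print(String customer, long amountInCents) {
        return customer + ": " + formatAmount(amountInCents);
    }

    private String formatAmount(long cents) {
        return String.format(Locale.ROOT, "%d.%02d", cents / 100, cents % 100);
    }
}

// After: the same method body, moved into its own class and made public.
// Like the private method it came from, it assumes non-negative amounts.
class AmountFormatter {
    public String formatAmount(long cents) {
        return String.format(Locale.ROOT, "%d.%02d", cents / 100, cents % 100);
    }
}

// The printer now delegates to a collaborator instead of a private method.
class InvoicePrinter {
    private final AmountFormatter formatter;

    InvoicePrinter(AmountFormatter formatter) { this.formatter = formatter; }

    String print(String customer, long amountInCents) {
        return customer + ": " + formatter.formatAmount(amountInCents);
    }
}

class ExtractClassDemo {
    public static void main(String[] args) {
        System.out.println(new AmountFormatter().formatAmount(1999));                   // 19.99
        System.out.println(new InvoicePrinter(new AmountFormatter()).print("ACME", 5)); // ACME: 0.05
    }
}
```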

consistency over elegance

December 29, 2007

Developers usually spend more time reading code than writing it. Consistency makes the code more readable.

Is it better to do things consistently wrongly than erratically well? Maybe not in life, but when it comes to software, yes. Here are my ratings:

:-D consistently well

:-) consistently wrongly

:-| erratically well

:-( erratically wrongly

Consistency wins.

Sometimes a particular anti-pattern consistently infests the whole application. There is no point in throwing bodies at refactoring it, because the gain may be small compared to the cost. The anti-pattern may be well known and, in general, not extremely harmful. Refactoring it in one place may seem decent but introduces inconsistency with all the other places. I value overall consistency over single-shot elegance.

There’s no rule without exception, right? Sometimes the goal is to implement something in a completely different way, to implement an eye-opener, to create “the new way”. Then one could rely on the distributed-refactoring spirit of the team and let others join the refactoring feast. If the spirit evaporated :(, one could always make using “the old way” deliberately embarrassing or just frikin’ difficult.

The consistency rule helps with all those hesitations a developer has on a daily basis. Ever felt like the two-headed ogre from Warcraft?


“This way, no that way!” – the heads argue.

Yes, you can implement stuff in multiple ways… My advice is: know your codebase and be consistent. Choose the way that was already chosen.

Being consistent is important but not when it goes against The Common Sense. Obviously ;)

guilty of untestability

December 19, 2007

If a class is difficult to test, don’t blame your test code or deficiencies in mock/test libraries. Instead, try very hard to make the class under test easy to test.

Is the class hard to test? Then it’s the class itself to blame, never the test code.

The punishment is simple: merciless refactoring.

Yet so many times the test is found guilty and unjustly sentenced to refactoring or improving.

Here are some real-life examples – I saw it, I moaned, I preached (poor soul who paired with me that day):

- Class calls static methods. New patterns of mocking static methods are introduced. Yet another mocking framework (or supporting code) is added. Instead, the static class could be refactored into a genuine citizen of the OO world, where dependency injection really works miracles. Ok, I didn’t really see that one. But I read about it ;)

- Class has many dependencies. A builder is introduced to create instances with properly mocked/stubbed dependencies. The effort to refactor the test code and introduce the builder could be spent on splitting the class so that it is smaller, has fewer dependencies and doesn’t need a builder any more.

- Class has many dependencies yet again. The test class is enhanced with a bunch of private utility methods to help set up dependencies. Yet again, I would rather split the class under test…

- Test method is huge. Test method is decomposed into smaller methods. Instead, I would look at the responsibility under test. Perhaps the responsibility/method under test is just bloated and solves multiple different problems. I’d rather delegate different responsibilities to different classes.
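A sketch of the alternative suggested in the first example, using the JDK’s `java.time.Clock` as the injected dependency: instead of mocking a static `LocalDate.now()` call, the class receives a `Clock` and the test passes `Clock.fixed(...)`. The `Trial` classes are hypothetical.

```java
import java.time.Clock;
import java.time.Instant;
import java.time.LocalDate;
import java.time.ZoneOffset;

// Before: the static call LocalDate.now() ties the result to the real date,
// which tempts people to mock static methods in the test.
class TrialBefore {
    private final LocalDate expiresOn;

    TrialBefore(LocalDate expiresOn) { this.expiresOn = expiresOn; }

    boolean isExpired() { return LocalDate.now().isAfter(expiresOn); }
}

// After: the time source is a plain injected dependency.
class Trial {
    private final LocalDate expiresOn;
    private final Clock clock;

    Trial(LocalDate expiresOn, Clock clock) {
        this.expiresOn = expiresOn;
        this.clock = clock;
    }

    boolean isExpired() { return LocalDate.now(clock).isAfter(expiresOn); }
}

class InjectedClockDemo {
    public static void main(String[] args) {
        // The test controls "now" without any static mocking.
        Clock clock = Clock.fixed(Instant.parse("2007-12-19T12:00:00Z"), ZoneOffset.UTC);
        System.out.println(new Trial(LocalDate.of(2007, 12, 18), clock).isExpired()); // true
        System.out.println(new Trial(LocalDate.of(2007, 12, 19), clock).isExpired()); // false

        // Production code would pass the real clock instead.
        Trial production = new Trial(LocalDate.of(2100, 1, 1), Clock.systemDefaultZone());
        System.out.println(production.isExpired());
    }
}
```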

All of that comes down to some very simple principles I follow.

If I find testing difficult, I look for reasons in the class under test. If the test smells, I look for reasons in the class under test. The class under test is always guilty until proven innocent.

I’m not saying to stop refactoring test code; I’m saying to focus on the class under test. Don’t make test code smarter if you can make the code easier to test. If you spend too much time figuring out how to test something, it means the code under test sucks.

For me, the quality of the class is reflected by the clarity and simplicity of its test.

Here are some warnings you can find in the test code.

- too big setup() method

- too many fields

- too big test method

- too many test methods

- too many private utility methods

- too…

All of them smell and require refactoring. You can refactor test code, make it smarter and better. You can also refactor code under test so that the test doesn’t have to be smart and becomes simple. Simple code doesn’t smell. Usually. ;)

guilty of untestability – intro :)

December 18, 2007

Tomo writes about how to mock static methods and how cool JMockit is. This gives me an opportunity to write about something loosely linked to his post.

No offence Tomo ;), but I don’t believe that a class is difficult to test because I don’t know enough mock libraries. The class is difficult to test because its design sucks. Static methods are difficult to mock and I am really pleased with that. At least developers will avoid them in order to keep the code more testable. When you introduce a new shiny pattern of mocking static methods, other developers may follow you. That means more and more static methods.

However, there is something else that strikes me: the acrobatics in the test code needed to test a class. It should be the other way around: if a class is difficult to test, stop hacking the test code and refactor the class. The best approach is obviously TDD: you always end up with testable classes.

I’m not talking about static methods any more? Good. I’m not really against static methods. I love them, we should all love them and keep them safe on 3.5-inch floppies, among other Turbo Pascal code.

This was supposed to be an intro to more interesting stuff, but ragging on static methods gave me weird and unexpected pleasure. Oh right, I’m not so zealous as to treat all static methods with a flame thrower. Stuff like utility libraries is fine, and I’ve never needed to mock them. Tomo defends static methods bravely (see comment below). He is right, but mocking static methods still smells funny… If the class is difficult to test, I just wouldn’t challenge the test code. I’d rather try very hard to make the class under test easy to test.

I rant about it here.

I’m hard-coded

November 17, 2007

About a year ago I paired on a certain problem with a client tech lead. I wanted to hard-code some stuff in a PHP class; he wanted to externalize this configuration to the database. In the end, he convinced me that we should do it in the database. Cool.

It turned out that one of the managers had heard the whole discussion. Then, during iteration kick-offs and on various other occasions, he used to say: “please don’t hard-code it”.

Recently I asked one dev why he had externalized a certain piece of configuration (I could bet my head that it was never gonna change).

He said: “I didn’t want to hard-code it and it’s only 2 more properties in the configuration file”.

So I told him about YAGNI. And I told him that our wonderful configuration file now has 230 properties. Oh, by the way, we use 10 of them.

Hard-coding is such a dirty word.

On my current project, Spring alone is configured in 120 XML files, a proud 1 MB in total. I think the entire universe is configured there.

My theory is that many people think that if it’s not in XML, then it’s hard-coded. And since hard-coding is such a dirty word… well, everything ends up in an XML or properties file.

Maybe I’m weird, but I prefer to configure my Java apps using Java, Ruby apps using Ruby and so on. Fortunately, I am not the only one!

- In Guice you configure dependencies in Java code. I waited too long for that. Thank you, Guice.

- Rails apps have only a few lines of configuration (database settings) and everything else is pure Ruby code.

Configuring is such a noble thing. It’s just wise to remember that configuring does not equal an XML file. For Java apps I tend to configure in Java:

- it’s smaller, more KISSy (no supporting code to read configuration).

- lets me use refactoring tools to change configuration (e.g. in Spring you have to keep the XML files in sync with your Java refactorings).

- lets me use the compiler to validate configuration (e.g. there is absolutely no way you can set “dummy” on an integer field). I can focus on solving the problem rather than implementing configuration validation.

- usually doesn’t require documentation. Provided that class/method/variable names in your code are sensible. And I’m sure they are.

In the old days the distinction between hard-coding and configuring was clear: written in a C++ class = hard-coded = bad; written in a script/properties file = configured = good. Unfortunately, this understanding of hard-coding is still alive today: some think that having a constant in a Java class is dirty, so let’s add a new property to the system!

Sometimes externalizing configuration is perfectly valid, but it applies only to a small number of properties. Those are usually: different across environments, changed frequently, changed by non-developers, etc.
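A sketch of that split in Java, with hypothetical names: values that never change are plain constants the compiler checks, and only the one setting that differs per environment is read from outside, here via a JVM system property whose name and default value are assumptions.

```java
import java.time.Duration;

// Hypothetical configuration for a Java app, written in Java.
final class AppConfig {
    // Hard-coded on purpose: these never change between environments.
    // Writing MAX_RESULTS = "dummy" would simply not compile.
    static final int MAX_RESULTS = 50;
    static final Duration HTTP_TIMEOUT = Duration.ofSeconds(30);

    // Externalized: this one really differs between environments.
    final String databaseUrl;

    private AppConfig(String databaseUrl) { this.databaseUrl = databaseUrl; }

    static AppConfig fromSystemProperties() {
        // -Dapp.db.url=... overrides the local default.
        return new AppConfig(System.getProperty("app.db.url", "jdbc:postgresql://localhost/dev"));
    }
}

class ConfigDemo {
    public static void main(String[] args) {
        AppConfig config = AppConfig.fromSystemProperties();
        System.out.println(AppConfig.MAX_RESULTS + " results, timeout " + AppConfig.HTTP_TIMEOUT);
        System.out.println(config.databaseUrl);
    }
}
```

Nothing here needs a parser, a validation layer or documentation beyond the names themselves; the single externalized value is the only place where a typo can slip past the compiler.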

In the world of Configuration Over Hard-coding my stance is Hard-coding Over Over-engineering. Sounds a bit stupid but I couldn’t think of anything better.

Happy hard-coding!

Posted by szczepiq
