I will have the pleasure of presenting Mockito at Jazoon in Zurich. My session is on Thursday – make sure you don’t miss it. During the session I’m going to show a few slides and do live TDD with Mockito & other mocking frameworks. I’m going to use Infinitest. Guys, it’s huge. Brett Schuchert wrote about it, and Bartek told me several times to try it. Finally I did and I must say I’m impressed. Infinitest is like transcending to real TDD.

subclass-and-override vs partial mocking vs refactoring

January 13, 2009

Attention all noble mockers and evil partial mockers. Actually… both styles are evil :) Spy, don’t mock… or better: do whatever you like just keep writing beautiful, clean and non-brittle tests.

Let’s get to the point: partial mocking smelled funny to me for a long time. I thought I didn’t need it because frankly, I hadn’t found a situation where I could use it.

Until a few days ago, when I found a place for it. Did I just come to terms with partial mocks? Maybe. Interestingly, the partial mock scenario seems to be related to working with code that we don’t have full control of…

I’ve been hacking on a new feature for Mockito, an experiment which is supposed to enhance the feedback from a failing test. On the way, I encountered a spot where partial mocking appeared handy. I decided to code the problem in a few different ways and convince myself that partial mocking is a true blessing.

Here is an implementation of a JunitRunner. I trimmed it a little bit so that only interesting stuff stayed. The runner should print warnings only when the test fails. The super.run(notifier) is wrapped in a separate method so that I can replace this method from the test:

What would the test look like? I’m going to subclass-and-override to replace the runTestBody() behavior. This will give me the opportunity to test the run() method.
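As a rough, dependency-free illustration of the idea (not the actual Mockito runner), the sketch below shows a runner that wraps super.run(notifier) in runTestBody() and a test-style subclass that overrides it. Only run() and runTestBody() come from the post; `Notifier`, `BaseRunner` and `WarningRunner` are hypothetical stand-ins for the JUnit types, and "printing a warning" is simplified to adding a string to a list.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for JUnit's notifier: it only remembers whether a failure was reported.
class Notifier {
    private boolean failed;
    void fireTestFailure() { failed = true; }
    boolean hasFailed() { return failed; }
}

// Hypothetical stand-in for the JUnit base runner that we do not control.
class BaseRunner {
    public void run(Notifier notifier) {
        // a real runner would execute the test class here
    }
}

// Simplified runner: collects a warning only when the test body reported a failure.
class WarningRunner extends BaseRunner {
    final List<String> printed = new ArrayList<>();

    @Override
    public void run(Notifier notifier) {
        runTestBody(notifier);
        if (notifier.hasFailed()) {
            printed.add("WARNING");
        }
    }

    // super.run(notifier) is wrapped in its own method so that a test can replace it.
    protected void runTestBody(Notifier notifier) {
        super.run(notifier);
    }
}

class SubclassAndOverrideDemo {
    public static void main(String[] args) {
        // Subclass-and-override: replace only runTestBody() with a failing body.
        WarningRunner failing = new WarningRunner() {
            @Override
            protected void runTestBody(Notifier notifier) {
                notifier.fireTestFailure();
            }
        };
        failing.run(new Notifier());

        // The untouched runner delegates to the (empty) base runner, so nothing fails.
        WarningRunner passing = new WarningRunner();
        passing.run(new Notifier());

        System.out.println("failing run printed: " + failing.printed);
        System.out.println("passing run printed: " + passing.printed);
    }
}
```

The anonymous subclass plays the role of the test-specific implementation: everything in run() stays real, only the test body is swapped.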

It’s ugly but shhh… let’s blame the jUnit API.

The runTestBody() method is quite naughty but I’ve got a powerful weapon at hand: partial mocking. What’s that? Typically mocking consists of using a replacement of the entire object. Partial stands for replacing only part of the real implementation, for example a single method.

Here is the test, using hypothetical Mockito partial mocking syntax. Actually, shouldn’t I call it partial stubbing?

Both tests are quite similar. I test the MockitoJUnitRunner class by replacing the implementation of runTestBody(notifier). The first example uses a test-specific implementation of the class under test. The second test uses a kind of partial mocking. This sort of comparison was very nicely done in this blog post by Phil Haack. I guess I came to a similar conclusion and I believe that:

- subclass-and-override is not worse than partial mocking

- subclass-and-override might give cleaner code just like in my example. It all depends on the case at hand, though. I’m sure there are scenarios where partial mocking looks & reads neater.

Hold on a sec! What about refactoring? Some say that instead of partial mocking we should design the code better. Let’s see.

The code might look like this. There is a specific interface JunitTestBody that I can inject from the test. And yes, I know I’m quite bad at naming types.

Now, I can inject a proper mock or an anonymous implementation of the entire JunitTestBody interface. I’m not concerned about the injection style because I don’t feel it matters that much here. I’m passing JunitTestBody as an argument.
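A minimal sketch of that refactoring, under the same simplifying assumptions as before: `JunitTestBody` is the interface name from the post, but its single method, the `Notifier` stand-in and the `InjectedBodyRunner` class are hypothetical, and the real JUnit API would constrain the signatures.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for JUnit's notifier.
class Notifier {
    private boolean failed;
    void fireTestFailure() { failed = true; }
    boolean hasFailed() { return failed; }
}

// Interface name taken from the post; its single method is an assumption.
interface JunitTestBody {
    void run(Notifier notifier);
}

// Simplified runner: the test body is passed in instead of being an overridable method.
class InjectedBodyRunner {
    final List<String> printed = new ArrayList<>();

    void run(JunitTestBody body, Notifier notifier) {
        body.run(notifier);
        if (notifier.hasFailed()) {
            printed.add("WARNING");
        }
    }
}

class InjectionDemo {
    public static void main(String[] args) {
        InjectedBodyRunner runner = new InjectedBodyRunner();
        // The test injects a body that fails; a lambda serves as the anonymous implementation.
        runner.run(notifier -> notifier.fireTestFailure(), new Notifier());
        System.out.println(runner.printed);
    }
}
```

Nothing is overridden or partially mocked here; the price is one more type and one more parameter in the production code.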

Let’s draw some conclusions. In this particular scenario choosing refactoring over partial mocking doesn’t really push me towards a better design. I don’t want to get into details but the JUnit API constrains me a bit here so I cannot refactor that freely. Obviously, you can figure out a better refactoring – I’m just yet another Java developer. On the other hand, the partial mocking scenario is a very rare finding for me. I believe there might be something wrong in my code if I had to partial mock too often. After all, look at the tests above – can you say they are beautiful? I can’t.

So,

- I cannot say subclass-and-override < partial mocking

- not always refactoring > subclass-and-override/partial mocking

- partial mocking might be helpful but I’d rather not overuse it.

- partial mocking scenario seems to lurk in situations where I cannot refactor that freely.

Eventually, I chose subclass-and-override for my implementation. It’s simple, reads nicer and feels less mocky & more natural.

more of devoxx, more on interfaces

December 9, 2008

I gave my talk at devoxx and hopefully those who attended were not offended by my weird sense of humor :) Guys, we need to have more fun in IT…

Shortly after I gave the talk I had an interesting discussion about the coding style some folks call “coding to interfaces”. It started when I was asked whether Mockito is able to mock concrete classes. The answer is yes, Mockito doesn’t care if you mock an interface or a class. Mockito can do it thanks to primordial voodoo magic only ancient shamans understand these days (you guessed right – it’s the cglib library).

Here starts the controversy. Should Mockito allow mocking only interfaces and hence promote “coding to interfaces”? Dan North, a respected IT guru and Mockito friend, said between the lines of one of his articles:

it allows me to mock concrete classes which I think is a retrograde step – remember kids, mock roles, not objects

What about the guy who approached me after the Mockito session and told me a story about a codebase overrun with interfaces? Interfaces were introduced by developers not because they wanted to mock roles, not objects, but because the mocking framework they used “promoted” coding to interfaces. This codebase was not very friendly.

To me, interface frenzy makes the codebase hard to browse, for example due to the extra effort required to find the implementation. Also, too many interfaces dilute the actual meaning of those interfaces that are really important. Therefore sometimes I mock classes, sometimes I mock interfaces and Mockito deals with it transparently. There are situations where I always use interfaces but let’s not bring it up here.

I remember some old-school Java book I read years ago. It read: “every java class should have an interface”. Don’t believe everything you read, and the best example would be this blog, wouldn’t it?

Use the coding style that works best for you. Drink mockitos only with interfaces if you like it this way.

I wish there was a mocking framework…

November 3, 2008

Let’s start from the beginning. Lately, I’ve been quite busy (perhaps lazy is a better word, though) and I couldn’t find time to reply sharply to a few interesting blog articles. Dan North became a friend of Mockito and wrote about the end of endotesting. His post sparked a few interesting comments. Steve Freeman wrote:

But, it also became clear that he wrote Mockito to address some weak design and coding habits in his project and that the existing mocking frameworks were doing exactly their job of forcing them into the open. How a team should respond to that feedback is an interesting question.

Well, I wrote Mockito because I needed a simple tool that didn’t get in the way and gave readable & maintainable code.

In fact, the syntactic noise in jMock really helps to bring this out, whereas it’s easy for it to get lost with easymock and mockito.

When something is hard to test it gives excellent feedback about the quality of the code under test. If you’re a developer then your main responsibility is to respond to this feedback. jMock guys came up with this excellent metaphor: “you should listen to your tests“.

I wish there was a mocking framework which gave positive feedback when the production code was clean but gave negative feedback when the design was weak. When I used jMock I had an impression that the feedback is negative all the time, regardless of the quality of the design. Good code or bad code – the syntactic noise was present in every test. If the feedback is always negative how do I know when the design is weak?

Patrick Kua writes in his blog:

That’s why that even though Mockito is a welcome addition to the testing toolkit, I’m equally frightened by its ability to silence the screams of test code executing poorly designed production code

Mockito tends to give positive feedback. It keeps the syntactic noise low so the tests are usually clean regardless of the design. If the feedback is always positive how do I know when the design is weak?

So, what brings out design issues better – jMock or Mockito? Go figure: the first gives too much noise, the second is too silent. Before you join the debate, please note that the discussion is fairly academic. After all it is nothing else but looking at the mocking syntax and thinking how it influences the production code.

Can we really say that the quality of the production code is different when tested with a different mocking framework? We strive to build fabulous mocking frameworks but high quality code is the result of skill, experience and so much more than just a choice between Mockito or jMock, TypeMock or RhinoMock, expectations before or after, etc.

At this moment, for some unknown reason, one of the readers suddenly remembered the test he wrote last week. The one that required 17 mock objects. Which one? The one where the mock is told to return the mock, to return the mock, to return the mock, to return the mock, to return the mock. Then assert something equals seven. Oh yeah, that one! The test looked quite horrible but hey, you know what they say, better a bad test than no test. Obviously, this ugly jMock made the test unreadable. The design was absolutely flawless because there were many patterns used. Fortunately, the team started using Mockito the following week! The rumor was that a Mockito test became unreadable only if the number of mocks per test (MPT) exceeded 25. Awesome! Next week, the eager developer was unpleasantly surprised with Mockito. The test with MPT 17 looked just as horrible. To solve the problem, the team decided to install EasyMock.

I haven’t participated in enough Java projects but I dare to say this: the quality of design was no better when we did hand mocking, EasyMocking, jMocking or drinking Mockitos. Somehow drinking Mockitos was a much more pleasant experience, maybe because it’s a darn good drink.

No hangover guaranteed. (Eastern Europeans only).

mockito after agile2008

August 27, 2008

I spoke about spying vs mocking and the Mockito library at Agile 2008.

The turnout was quite good given that there were almost 40 sessions in parallel (mostly about big things and with big-name presenters). I met Johannes Link, the creator of a JavaScript spying framework in the spirit of Mockito. Go Test Spies! :) Between sessions, we spoke with agile coaches who had already tried Mockito and we received positive feedback (and they didn’t know we were the authors beforehand!).

I hope I managed to popularize Mockito and the spying approach.

Someone asked me what’s the user base of Mockito. Although I don’t know the answer I can throw some figures at you:

~500 downloads last month (does not include Maven users)

~2300 visits to Mockito last month (thank you, Google Analytics)

One of the attendees asked me for the slides from my session at Agile2008. Bear in mind that they have less value if you didn’t attend the presentation – some slides are pretty minimal or they are just open questions. Anyway, here are the slides. Oh, and the session title “Don’t give up on mocking” is a complete mess. What I really want to convey is “Give up on mocking, go for spying”. One of my older posts sheds some light on why I messed the title up.

should I worry about the unexpected?

July 12, 2008

The level of validation in typical state testing with assertions is different from typical interaction testing with mocks. Allow me to have a closer look at this subtle difference. My conclusion dares to question an important part of the mocking philosophy: worrying about the unexpected.

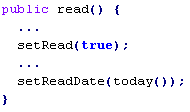

Let me explain by starting from the beginning: a typical interaction based test with mocks (using some pseudo-mocking syntax):

#1

Here is what the test tells me:

– When you read the article

– then the reader.read() should be called

– and NO other method on the reader should be called.

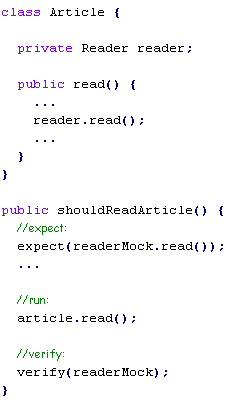

Now, let’s have a look at a typical state based test with assertions:

#2

Which means:

– When you read the article

– then the article should be read.

Have you noticed the subtle difference between #1 and #2?

In state testing, an assertion is focused on a single property and doesn’t validate the surrounding state. When the behavior under test changes some additional, unexpected property – it will not be detected by the test.

In interaction testing, a mock object validates all interactions. When the behavior under test calls some additional, unexpected method on a mock – it will be detected and UnexpectedInteractionError is thrown.
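To make the contrast tangible, here is a small hand-rolled sketch rather than the API of any real mocking framework. `UnexpectedInteractionError` is the name used above, but the class here is a hypothetical stand-in, and `Reader.bookmark()`, `readBy()`, `readAndBookmarkBy()` and `StrictReaderMock` are all assumptions made for illustration.

```java
import java.util.ArrayList;
import java.util.List;

// Error name taken from the post; this class itself is a hypothetical stand-in.
class UnexpectedInteractionError extends AssertionError {
    UnexpectedInteractionError(String call) {
        super("unexpected interaction: " + call);
    }
}

interface Reader {
    void read();
    void bookmark(); // hypothetical extra method that no test expects
}

// Hand-rolled strict mock: any call that was not announced up front fails immediately.
class StrictReaderMock implements Reader {
    private final List<String> expected = new ArrayList<>();
    private final List<String> actual = new ArrayList<>();

    void expect(String method) { expected.add(method); }

    @Override public void read() { record("read"); }
    @Override public void bookmark() { record("bookmark"); }

    private void record(String method) {
        if (!expected.contains(method)) {
            throw new UnexpectedInteractionError(method);
        }
        actual.add(method);
    }

    // Fails when an expected call never happened.
    void verify() {
        if (!actual.containsAll(expected)) {
            throw new AssertionError("expected calls " + expected + " but got " + actual);
        }
    }
}

class Article {
    private boolean read;
    private boolean bookmarked; // extra state that a focused assertion never looks at

    void readBy(Reader reader) {
        reader.read();
        read = true;
    }

    // Same as readBy(), plus an additional state change and an additional interaction.
    void readAndBookmarkBy(Reader reader) {
        readBy(reader);
        bookmarked = true;
        reader.bookmark();
    }

    boolean isRead() { return read; }
    boolean isBookmarked() { return bookmarked; }
}

class UnexpectedDemo {
    public static void main(String[] args) {
        // State testing: the focused check on isRead() does not notice the extra change.
        Reader silent = new Reader() {
            @Override public void read() { }
            @Override public void bookmark() { }
        };
        Article first = new Article();
        first.readAndBookmarkBy(silent);
        System.out.println("focused state check passes: " + first.isRead());

        // Interaction testing: the strict mock rejects the call it was not told about.
        StrictReaderMock mock = new StrictReaderMock();
        mock.expect("read");
        try {
            new Article().readAndBookmarkBy(mock);
        } catch (UnexpectedInteractionError e) {
            System.out.println(e.getMessage());
        }
    }
}
```

The same extra behavior slips past the state check and is caught by the strict mock, which is exactly the asymmetry in question.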

I wonder if interaction testing should be extra defensive and worry about the unexpected?

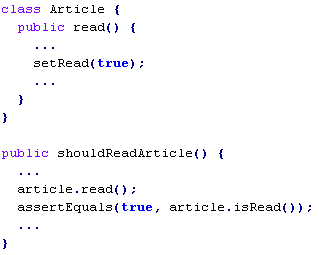

Many of you say ‘yes’ but then how come you don’t do state based testing along this pattern? I’ll show you how:

#3

Which means:

– When you read the article

– then the article should be read

– and no other state on the article should change.

Note that the assertion is made on the entire Article object. It effectively detects any unexpected state changes (e.g. if the read() method changes some extra property on the article then the test fails). This way a typical state based test becomes extra defensive, just like a typical test with mocks.
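A whole-object assertion of this kind can be sketched with a plain equals() comparison. The `Article` below is a hypothetical reconstruction: the `read` flag and `getReadDate()` appear in the post, while the constructor taking a boolean and the equals()/hashCode() implementation are assumptions.

```java
import java.time.LocalDate;
import java.util.Objects;

class Article {
    private boolean read;
    private LocalDate readDate; // the "extra property" that read() also touches

    Article() { }

    // Hypothetical convenience constructor for building the expected object in a test.
    Article(boolean read) {
        this.read = read;
    }

    void read() {
        read = true;
        readDate = LocalDate.now();
    }

    boolean isRead() { return read; }
    LocalDate getReadDate() { return readDate; }

    // Whole-object equality: any extra state change makes two articles differ.
    @Override
    public boolean equals(Object o) {
        if (!(o instanceof Article)) {
            return false;
        }
        Article other = (Article) o;
        return read == other.read && Objects.equals(readDate, other.readDate);
    }

    @Override
    public int hashCode() {
        return Objects.hash(read, readDate);
    }
}

class DefensiveStateTestDemo {
    public static void main(String[] args) {
        Article article = new Article();
        article.read();

        // Focused assertion: only looks at the one property it cares about.
        System.out.println("isRead: " + article.isRead());

        // Whole-object assertion: the expected article has no read date, so the comparison fails.
        System.out.println("equals expected: " + new Article(true).equals(article));
    }
}
```

The focused check is satisfied, while the comparison against the expected object is not, because read() changed more than the test anticipated.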

The thing is state based tests are rarely written like that. So the obvious question is how come finding the unexpected is more important in interaction testing than in state testing?

Let’s consider pros & cons. Surely detecting the unexpected seems to add credibility and quality to the test. Sometimes however, it just gets in the way, especially when doing TDD. To explain it more clearly let’s get back to example #3: the state based test with detecting the unexpected enabled. Say I’d like to test-drive a new feature:

#4

I run the tests to find out that the newly added test method fails. It’s time to implement the feature:

I run the test again and the new test passes now but hold on… the previous test method fails! Note that the existing functionality is clearly not broken.

What happened? The previous test detected the unexpected change on the article – setting the date. How can I fix it?

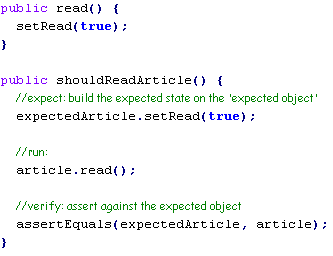

1. I can merge both test methods into one which is probably a good idea in this silly example. However, many times I really want to have small, separate test methods that are focused around behavior. One-assert-per-test people do it all the time.

2. Finally, I can stop worrying about the unexpected and focus on testing what is really important:

```java
// Each test asserts only the one property it cares about.
public void shouldSetReadDateWhenReading() {
    article.read();
    assertEquals(today(), article.getReadDate());
}

public void shouldReadArticle() {
    article.read();
    assertTrue(article.isRead());
}
```

Ok, I know the example is silly. But it is only to explain why worrying about the unexpected may NOT be such a good friend of TDD or small & focused test methods.

Let’s get back to mocking.

Most mocking frameworks detect the unexpected by default. When new features are test-driven as new test methods, sometimes existing tests start failing due to unexpected interaction errors. What happens next?

1. Junior developers copy-paste expectations from one test to another making the test methods overspecified and less maintainable.

2. Veteran developers modify existing tests and change the expectations to ignore some/all interactions. Most mocking frameworks enable developers to ignore expectations selectively or object-wise. Nevertheless, it is still a bit of a hassle – why should I change existing tests when the existing functionality is clearly not broken? (like in example #4 – functionality not broken but the test fails). The other thing is that explicitly ignoring interactions is also a bit like overspecification. After all, to ignore something I prefer just not to write ANYTHING. In state based tests if I don’t care about something I don’t write an assertion for that. It’s simple and so natural.

To recap: worrying about the unexpected sometimes leaves me with overspecified tests or less comfortable TDD. Now, do I want to trade that for quality? I’m talking about the quality introduced by extra defensive tests.

The thing is I haven’t found proof that the quality improved when every test worried about the unexpected. That’s why I prefer to write extra defensive tests only when it’s relevant. That’s why I really like state based testing. That’s why I prefer spying to mocking. Finally, that’s why I don’t write verifyNoMoreInteractions() in every Mockito test.

What do you think? Have you ever encountered related problems when test-driving with mocks? Do you find the quality improved when interaction testing worries about the unexpected? Or perhaps should state testing start worrying about the unexpected?

London Geek Night

April 27, 2008

The Thoughtworks office in London holds a Geek Night on 28/05/2008.

It’s open for everyone so don’t plan anything for the last Wednesday of May.

The topic concentrates around mocking. There will be jMock guys selling jMock and Felix talking about Mockito. Trust me, even if you know everything about mocking, this session will be interesting.

I’m not going to be there because in a few days I’m moving to a different country :)

is there a difference between asking and telling?

April 26, 2008

These days testing interactions is getting very common. Mocking tools are becoming a necessary supplement to xUnit libraries precisely to facilitate testing interactions. I cannot imagine writing software without assert() (to test state) and verify() (to test interactions).

I’m about to show that it is very useful to distinguish two basic kinds of interactions: asking an object for data and telling an object to do something. Fair enough, it may sound obvious but most mocking frameworks treat all interactions equally and don’t take advantage of the distinction.

Let’s have a look at this piece of code:

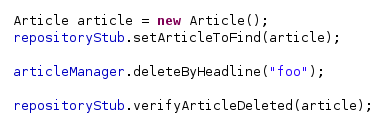

Before mocking was invented we manually wrote stubs and tests looked like this:

Sometimes I miss those days before mocking. Test code looked so nice… The only problem was that hand writing every stub was quite cumbersome and produced tons of extra code.

Anyway, let’s try to extract a pattern from the above test code:

1. when the repository is asked to find “foo” article, then return article

2. deleteByHeadline(“foo”);

3. make sure repository was told to delete article

So, to generalize it:

1. stub

2. run

3. assert

Now, let’s put it into words:

Interaction that is asking an object for data (or indirect input) is basically providing necessary input for processing. This kind of interaction is meant to be stubbed before running code under test (exercising the behaviour).

Interaction that is telling an object to do something (or indirect output) is basically the outcome of processing. This kind of interaction is meant to be asserted after running code under test.
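The stub / run / assert pattern and the asking vs telling split can be sketched with a hand-written test double. Only `deleteByHeadline("foo")` and the idea of a repository that finds and deletes articles come from the post; `ArticleManager`, `ArticleRepository`, `findByHeadline`, `delete` and `RepositoryDouble` are assumed names for illustration.

```java
import java.util.ArrayList;
import java.util.List;

class Article {
    final String headline;
    Article(String headline) { this.headline = headline; }
}

interface ArticleRepository {
    Article findByHeadline(String headline); // asking: provides indirect input
    void delete(Article article);            // telling: is the indirect output
}

class ArticleManager {
    private final ArticleRepository repository;

    ArticleManager(ArticleRepository repository) {
        this.repository = repository;
    }

    void deleteByHeadline(String headline) {
        Article article = repository.findByHeadline(headline);
        repository.delete(article);
    }
}

// Hand-written test double: the question is stubbed, the command is recorded.
class RepositoryDouble implements ArticleRepository {
    Article toReturn;                                // configured before the run
    final List<Article> deleted = new ArrayList<>(); // inspected after the run

    @Override
    public Article findByHeadline(String headline) {
        return toReturn;
    }

    @Override
    public void delete(Article article) {
        deleted.add(article);
    }
}

class AskTellDemo {
    public static void main(String[] args) {
        // 1. stub: when the repository is asked for an article, return this one
        RepositoryDouble repository = new RepositoryDouble();
        Article foo = new Article("foo");
        repository.toReturn = foo;

        // 2. run
        new ArticleManager(repository).deleteByHeadline("foo");

        // 3. assert: the repository was told to delete exactly that article
        boolean toldToDelete = repository.deleted.size() == 1 && repository.deleted.get(0) == foo;
        System.out.println("told to delete foo: " + toldToDelete);
    }
}
```

Note that the stubbed question is never asserted explicitly: if findByHeadline() had not been called, delete() would not have received the stubbed article and step 3 would fail.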

Testing interactions like that is more natural and produces better code. Lessons learned from the old ways of testing interactions are implemented in Mockito:

Controversies

The above approach may seem controversial (quoting an interesting paragraph):

Then, when you do verify(…), you have to basically repeat the exact same expression you put in stub(…). This might work if you only have a couple calls to verify, but for anything else, it will be a lot of repeated code, I’m afraid

Basically, the controversy is about repeating the same expression in stub() and verify():

Do I really have to repeat the same expression? After all, stubbed interactions are verified implicitly. The execution flow of my own code does it completely for free. Aaron Jensen also noticed that:

If you’re verifying you don’t need to stub unless of course that method returns something that is critical to the flow of your test (or code), in which case you don’t really need to verify, because the flow would have verified.

Just to recap: there is no repeated code.

But what if an interesting interaction shares the characteristic of both asking and telling? Do I have to repeat interactions in stub() and verify()? Will I end up with duplicated code? Not really. In practice: If I stub then it is verified for free, so I don’t verify. If I verify then I don’t care about the return value, so I don’t stub. Either way, I don’t repeat myself. In theory though, I can imagine a rare case where I do verify a stubbed interaction, for example to make sure the stubbed interaction happened exactly n times. But this is a different aspect of behavior and apparently an interesting one. Therefore I want to be explicit and I am perfectly happy to sacrifice one extra line of code…

Finally…

Mockito recognises the difference between asking and telling, hence stubbing is separated from verification. Stubbing becomes a part of setup whereas verification a part of assertions. This produces cleaner code and gives more natural testing/TDD. It doesn’t increase code duplication as one might think. It’s just one of the ideas implemented in Mockito to make the mocking experience a little bit better. It’s not a silver bullet, though. Decent code is easily testable regardless of any mocking tools.

let’s spy

March 21, 2008

Following Gerard Meszaros: “Good names help us communicate the concepts”.

There is this Test Spy framework I worked on lately. Unfortunately, it doesn’t use ‘test spy’ metaphor at all. It talks about ‘mocks’ all the time. Some even say that the name of the library has something to do with mocking…

Was I just a foolish ignoramus who didn’t want to acknowledge different kinds of mocks? Ouch! I should say: Test Doubles. In case you haven’t heard about Doubles: they are all those substitutes for real objects that make testing simpler or faster or more maintainable. I like them because they shrink the unit under test and make it easier to test.

I like simplicity. Introducing new concepts doesn’t make things simple. Do I really need Double, Dummy, Fake, Mock, Spy and Stub to write decent code? On the other hand, I definitely need them to communicate how to write decent code.

At the back of my weird mind I used to think that Test Double breakdown was a bit disconnected from the reality where subtle differences are blurred (and the existing tools/libraries didn’t really reduce the confusion). I tried to keep things plain but I reached the boundary: trying to keep things simple (and sticking to mocks and stubs) actually could have increased the confusion and made things complicated.

That’s why I’m about to sort out Mockito terminology. Better late than never, right?

In all of my previous posts ‘Mockito mock’ should be read ‘Test Spy’. But what’s the Test Spy anyway? Or better: what’s the difference between a Test Spy and a Mock? Both serve verifying interactions but the Spy is simple, lightweight and it gives very natural testing experience, close to good old assertions. Sounds interesting? Have a look at some of my previous rants or read this excellent post by Hamlet D’Arcy (don’t miss Collaborator Continuum).

Let’s get back to my favourite Test Spy Framework. Do you think the following changes make things simpler and easier to understand? (I don’t really know the answer…)

@Mock -> @Spy

mock(Foo.class) -> createSpy(Foo.class)

Mockito @ Agile 2008

February 24, 2008

Just submitted a session proposal for Agile 2008. It’s about Mockito (yes, I know I’m boring).

Competition is fierce and without a proper (read: famous) name it will be difficult to get approved. But anyway: the deadline for submissions is tomorrow. If you have any feedback about my session and you are willing to share it with me before the end of tomorrow – I would be grateful.

I’m gonna present a short version of this session during the coming Last Thursday at the Holborn office – see you there!

Posted by szczepiq
