The level of validation in typical state testing with assertions differs from that in typical interaction testing with mocks. Let me take a closer look at this subtle difference. My conclusion dares to question an important part of the mocking philosophy: worrying about the unexpected.

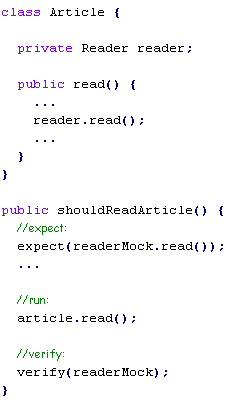

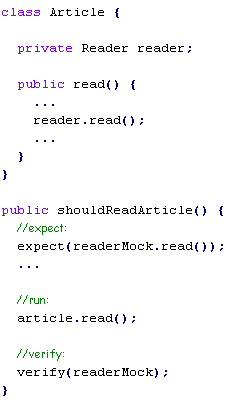

Let me start from the beginning with a typical interaction-based test with mocks (using some pseudo-mocking syntax):

#1

Here is what the test tells me:

– When you read the article

– then the reader.read() should be called

– and NO other method on the reader should be called.
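The three points above can be sketched roughly as follows. This is a hand-rolled strict mock in plain Java, not the syntax of any particular mocking framework. The `Reader` interface, its `bookmark()` method, the `Article(Reader)` constructor and the `StrictReaderMock` class are all assumptions made for illustration.

```java
import java.util.ArrayList;
import java.util.List;

// Thrown when the mock sees a call that no expectation covers.
class UnexpectedInteractionError extends AssertionError {
    UnexpectedInteractionError(String message) { super(message); }
}

// Hypothetical collaborator; only read() is named in the text.
interface Reader {
    void read();
    void bookmark();
}

// Hypothetical class under test: reading an article delegates to its reader.
class Article {
    private final Reader reader;
    Article(Reader reader) { this.reader = reader; }
    void read() { reader.read(); }
}

// Strict mock: every call must have been expected beforehand.
class StrictReaderMock implements Reader {
    private final List<String> expected = new ArrayList<>();
    private final List<String> actual = new ArrayList<>();

    void expect(String method) { expected.add(method); }

    @Override public void read() { record("read"); }
    @Override public void bookmark() { record("bookmark"); }

    private void record(String method) {
        if (!expected.contains(method)) {
            throw new UnexpectedInteractionError("Unexpected call: " + method + "()");
        }
        actual.add(method);
    }

    // Fails unless the recorded calls match the expectations exactly.
    void verify() {
        if (!actual.equals(expected)) {
            throw new AssertionError("Expected " + expected + " but got " + actual);
        }
    }
}

class InteractionTestSketch {
    static void shouldReadArticle() {
        StrictReaderMock reader = new StrictReaderMock();
        reader.expect("read");          // then reader.read() should be called
        new Article(reader).read();     // when you read the article
        reader.verify();                // and nothing else was called
    }

    public static void main(String[] args) {
        shouldReadArticle();
        System.out.println("shouldReadArticle passed");
    }
}
```

The third point is implicit: nothing in the test body mentions `bookmark()`, yet a call to it would still fail the test.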

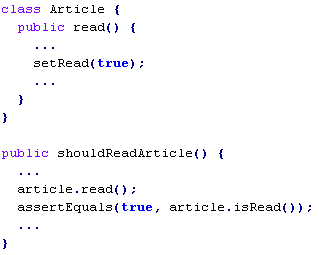

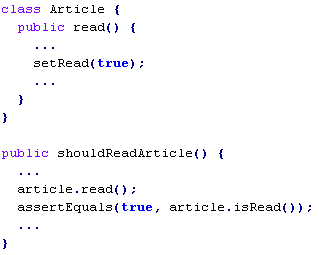

Now, let’s have a look at a typical state-based test with assertions:

#2

Which means:

– When you read the article

– then the article should be read.
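A state-based test of this kind might look like the sketch below. The `Article` class is a hypothetical stand-in, and the local `assertTrue` helper substitutes for a JUnit-style assertion so that the snippet runs without any library.

```java
// Hypothetical class under test with a single piece of state.
class Article {
    private boolean read;
    void read() { read = true; }
    boolean isRead() { return read; }
}

class StateTestSketch {
    // Minimal stand-in for a JUnit-style assertTrue.
    static void assertTrue(boolean condition) {
        if (!condition) throw new AssertionError("expected true");
    }

    static void shouldReadArticle() {
        Article article = new Article();
        article.read();                  // when you read the article
        assertTrue(article.isRead());    // then the article should be read
    }

    public static void main(String[] args) {
        shouldReadArticle();
        System.out.println("shouldReadArticle passed");
    }
}
```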

Have you noticed the subtle difference between #1 and #2?

In state testing, an assertion is focused on a single property and doesn’t validate the surrounding state. When the behavior under test changes some additional, unexpected property – it will not be detected by the test.

In interaction testing, a mock object validates all interactions. When the behavior under test calls some additional, unexpected method on a mock – it is detected and an UnexpectedInteractionError is thrown.
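To make the state-testing half of this concrete, here is a small sketch in which `read()` also bumps a hypothetical `viewCount` property. Both the property and the class layout are assumptions. The single-property check still passes, because nothing in the test looks at `viewCount`.

```java
// Hypothetical class under test with an extra property.
class Article {
    private boolean read;
    private int viewCount;

    void read() {
        read = true;
        viewCount++;   // additional change that the test below never inspects
    }

    boolean isRead() { return read; }
    int getViewCount() { return viewCount; }
}

class SinglePropertySketch {
    static void shouldReadArticle() {
        Article article = new Article();
        article.read();
        // Only isRead() is checked, so the viewCount change goes unnoticed.
        if (!article.isRead()) throw new AssertionError("article should be read");
    }

    public static void main(String[] args) {
        shouldReadArticle();
        System.out.println("shouldReadArticle passed");
    }
}
```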

I wonder if interaction testing should be extra defensive and worry about the unexpected?

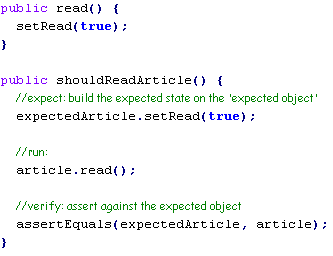

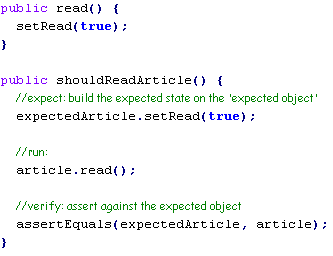

Many of you say ‘yes’, but then how come you don’t write state-based tests along the same pattern? I’ll show you how:

#3

Which means:

– When you read the article

– then the article should be read

– and no other state on the article should change.

Note that the assertion is made on the entire Article object. It effectively detects any unexpected state change (e.g. if the read() method changes some extra property on the article, the test fails). This way a typical state-based test becomes extra defensive, just like a typical test with mocks.
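One way to write such a whole-object assertion is sketched below. It assumes a hypothetical `Article` with a `title` and a `read` flag whose `equals()` covers every field; the `assertEquals` helper is a local stand-in for the JUnit-style assertion.

```java
import java.util.Objects;

// Hypothetical class under test; equals() covers all of its state.
class Article {
    private final String title;
    private boolean read;

    Article(String title, boolean read) {
        this.title = title;
        this.read = read;
    }

    void read() { read = true; }

    @Override public boolean equals(Object o) {
        if (!(o instanceof Article)) return false;
        Article other = (Article) o;
        return read == other.read && Objects.equals(title, other.title);
    }

    @Override public int hashCode() { return Objects.hash(title, read); }

    @Override public String toString() {
        return "Article{title=" + title + ", read=" + read + "}";
    }
}

class WholeObjectSketch {
    // Minimal stand-in for a JUnit-style assertEquals.
    static void assertEquals(Object expected, Object actual) {
        if (!Objects.equals(expected, actual)) {
            throw new AssertionError("expected " + expected + " but was " + actual);
        }
    }

    static void shouldReadArticle() {
        Article article = new Article("Mocks", false);
        article.read();
        // Compares every field covered by equals(), not just the read flag.
        assertEquals(new Article("Mocks", true), article);
    }

    public static void main(String[] args) {
        shouldReadArticle();
        System.out.println("shouldReadArticle passed");
    }
}
```

The defensiveness comes entirely from `equals()`: any field it compares becomes part of every such assertion.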

The thing is, state-based tests are rarely written like that. So the obvious question is: how come finding the unexpected is more important in interaction testing than in state testing?

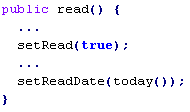

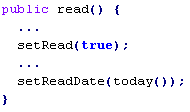

Let’s consider the pros and cons. Detecting the unexpected certainly seems to add credibility and quality to the test. Sometimes, however, it just gets in the way, especially when doing TDD. To explain this more clearly, let’s go back to example #3: the state-based test that detects the unexpected. Say I’d like to test-drive a new feature:

#4

I run the tests and find that the newly added test method fails. It’s time to implement the feature:
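A minimal sketch of such an implementation, together with the new test and the older whole-object test, could look like this. The injected `Clock`, the state-building constructor and the local `assertEquals` and `today()` helpers are assumptions that keep the example deterministic and library-free.

```java
import java.time.Clock;
import java.time.Instant;
import java.time.LocalDate;
import java.time.ZoneOffset;
import java.util.Objects;

// Hypothetical Article after the feature is implemented.
class Article {
    private final Clock clock;     // assumption: injected so "today" is deterministic
    private boolean read;
    private LocalDate readDate;    // new state introduced by the feature

    Article(Clock clock) { this(clock, false, null); }

    // Builds an article in a given state; handy for expressing expected values.
    Article(Clock clock, boolean read, LocalDate readDate) {
        this.clock = clock;
        this.read = read;
        this.readDate = readDate;
    }

    void read() {
        read = true;
        readDate = LocalDate.now(clock);   // the new behavior
    }

    boolean isRead() { return read; }
    LocalDate getReadDate() { return readDate; }

    @Override public boolean equals(Object o) {
        if (!(o instanceof Article)) return false;
        Article other = (Article) o;
        return read == other.read && Objects.equals(readDate, other.readDate);
    }

    @Override public int hashCode() { return Objects.hash(read, readDate); }

    @Override public String toString() {
        return "Article{read=" + read + ", readDate=" + readDate + "}";
    }
}

class ReadDateSketch {
    static final Clock CLOCK =
            Clock.fixed(Instant.parse("2009-01-15T10:00:00Z"), ZoneOffset.UTC);

    static LocalDate today() { return LocalDate.now(CLOCK); }

    static void assertEquals(Object expected, Object actual) {
        if (!Objects.equals(expected, actual)) {
            throw new AssertionError("expected " + expected + " but was " + actual);
        }
    }

    // New test: passes once read() sets the date.
    static void shouldSetReadDateWhenReading() {
        Article article = new Article(CLOCK);
        article.read();
        assertEquals(today(), article.getReadDate());
    }

    // Older whole-object test: written when read() changed only the read flag.
    static void shouldReadArticle() {
        Article article = new Article(CLOCK);
        article.read();
        assertEquals(new Article(CLOCK, true, null), article);
    }

    public static void main(String[] args) {
        shouldSetReadDateWhenReading();
        System.out.println("shouldSetReadDateWhenReading passed");
        try {
            shouldReadArticle();
        } catch (AssertionError e) {
            System.out.println("shouldReadArticle failed: " + e.getMessage());
        }
    }
}
```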

I run the test again and the new test passes now but hold on… the previous test method fails! Note that the existing functionality is clearly not broken.

What happened? The previous test detected the unexpected change on the article – setting the date. How can I fix it?

1. I can merge both test methods into one which is probably a good idea in this silly example. However, many times I really want to have small, separate test methods that are focused around behavior. One-assert-per-test people do it all the time.

2. Finally, I can stop worrying about the unexpected and focus on testing what is really important:

```java
// Each test checks only the property it cares about.
public void shouldSetReadDateWhenReading() {
    article.read();
    assertEquals(today(), article.getReadDate());
}

public void shouldReadArticle() {
    article.read();
    assertTrue(article.isRead());
}
```

OK, I know the example is silly. But it is only meant to explain why worrying about the unexpected may NOT be such a good friend of TDD or of small, focused test methods.

Let’s get back to mocking.

Most mocking frameworks detect the unexpected by default. When new features are test-driven as new test methods, sometimes existing tests start failing due to unexpected interaction errors. What happens next?

1. Junior developers copy-paste expectations from one test to another making the test methods overspecified and less maintainable.

2. Veteran developers modify existing tests and change the expectations to ignore some or all interactions. Most mocking frameworks enable developers to ignore expectations selectively or object-wise. Nevertheless, it is still a bit of a hassle – why should I change existing tests when the existing functionality is clearly not broken? (As in example #4: the functionality is not broken but the test fails.) The other thing is that explicitly ignoring interactions is also a bit like overspecification. After all, to ignore something I would prefer not to write ANYTHING. In state-based tests, if I don’t care about something I don’t write an assertion for it. It’s simple and natural.
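The second option can be sketched with a hand-rolled strict mock. This is not the API of any real mocking framework: the `Reader` interface, its `bookmark()` method, `StrictReaderMock` and its `ignore` method are all hypothetical. Here `Article.read()` has gained a new `bookmark()` call, so the original test fails until the extra interaction is explicitly ignored.

```java
import java.util.HashSet;
import java.util.Set;

class UnexpectedInteractionError extends AssertionError {
    UnexpectedInteractionError(String message) { super(message); }
}

// Hypothetical collaborator.
interface Reader {
    void read();
    void bookmark();
}

// Class under test after a new feature was added: it now also bookmarks.
class Article {
    private final Reader reader;
    Article(Reader reader) { this.reader = reader; }
    void read() {
        reader.read();
        reader.bookmark();   // new interaction introduced by the feature
    }
}

// Strict mock with an explicit way to ignore selected methods.
class StrictReaderMock implements Reader {
    private final Set<String> expected = new HashSet<>();
    private final Set<String> ignored = new HashSet<>();
    private final Set<String> called = new HashSet<>();

    void expect(String method) { expected.add(method); }
    void ignore(String method) { ignored.add(method); }

    @Override public void read() { record("read"); }
    @Override public void bookmark() { record("bookmark"); }

    private void record(String method) {
        if (ignored.contains(method)) return;
        if (!expected.contains(method)) {
            throw new UnexpectedInteractionError("Unexpected call: " + method + "()");
        }
        called.add(method);
    }

    // Fails if any expected method was never called.
    void verify() {
        if (!called.containsAll(expected)) {
            throw new AssertionError("Expected " + expected + " but got " + called);
        }
    }
}

class IgnoringSketch {
    // Original test: starts failing once Article.read() calls bookmark().
    static void shouldReadArticle() {
        StrictReaderMock reader = new StrictReaderMock();
        reader.expect("read");
        new Article(reader).read();
        reader.verify();
    }

    // Same test after the fix: the extra call has to be ignored explicitly.
    static void shouldReadArticleIgnoringBookmark() {
        StrictReaderMock reader = new StrictReaderMock();
        reader.expect("read");
        reader.ignore("bookmark");
        new Article(reader).read();
        reader.verify();
    }
}
```

The extra `ignore` line is exactly the kind of statement the test would not need if the mock did not worry about the unexpected.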

To recap: worrying about the unexpected sometimes leaves me with overspecified tests or less comfortable TDD. Now, do I want to trade that for quality? I mean the quality introduced by extra defensive tests.

The thing is, I haven’t found proof that quality improves when every test worries about the unexpected. That’s why I prefer to write extra defensive tests only when it’s relevant. That’s why I really like state-based testing. That’s why I prefer spying to mocking. Finally, that’s why I don’t write verifyNoMoreInteractions() in every Mockito test.
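The spying style can be illustrated with a hand-rolled sketch. This is not Mockito's implementation or API; the `ReaderSpy` class and its `verifyCalled` method are assumptions, and only the name `verifyNoMoreInteractions` is borrowed from the text. The spy records every call, checks only what the test asks about, and becomes strict only when the test opts in.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical collaborator.
interface Reader {
    void read();
    void bookmark();
}

// Hypothetical class under test that makes two calls on its reader.
class Article {
    private final Reader reader;
    Article(Reader reader) { this.reader = reader; }
    void read() {
        reader.read();
        reader.bookmark();
    }
}

// Hand-rolled spy: records calls and never fails on its own.
class ReaderSpy implements Reader {
    private final List<String> unverified = new ArrayList<>();

    @Override public void read() { unverified.add("read"); }
    @Override public void bookmark() { unverified.add("bookmark"); }

    // Checks that the method was called and marks one such call as verified.
    void verifyCalled(String method) {
        if (!unverified.remove(method)) {
            throw new AssertionError("Wanted but not invoked: " + method + "()");
        }
    }

    // Opt-in strictness: fails if any recorded call has not been verified.
    void verifyNoMoreInteractions() {
        if (!unverified.isEmpty()) {
            throw new AssertionError("Unverified interactions: " + unverified);
        }
    }
}

class SpySketch {
    // Passes even though bookmark() was also called.
    static void shouldReadArticle() {
        ReaderSpy reader = new ReaderSpy();
        new Article(reader).read();
        reader.verifyCalled("read");
    }

    public static void main(String[] args) {
        shouldReadArticle();
        System.out.println("shouldReadArticle passed");
    }
}
```

With this shape, ignoring an interaction means writing nothing, just as in a state-based test.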

What do you think? Have you ever encountered related problems when test-driving with mocks? Do you find the quality improved when interaction testing worries about the unexpected? Or perhaps should state testing start worrying about the unexpected?

Posted by szczepiq
